Creating a remote BitTorrent box with Terraform, Transmission and Docker on AWS

10 July, 2019

My head has very much been in the DevOps space lately as I've been more and more hands-on with AWS. It got me thinking about an idea I had some time ago: what would be involved in running an ephemeral BitTorrent client on AWS, with downloads automatically saved to S3?

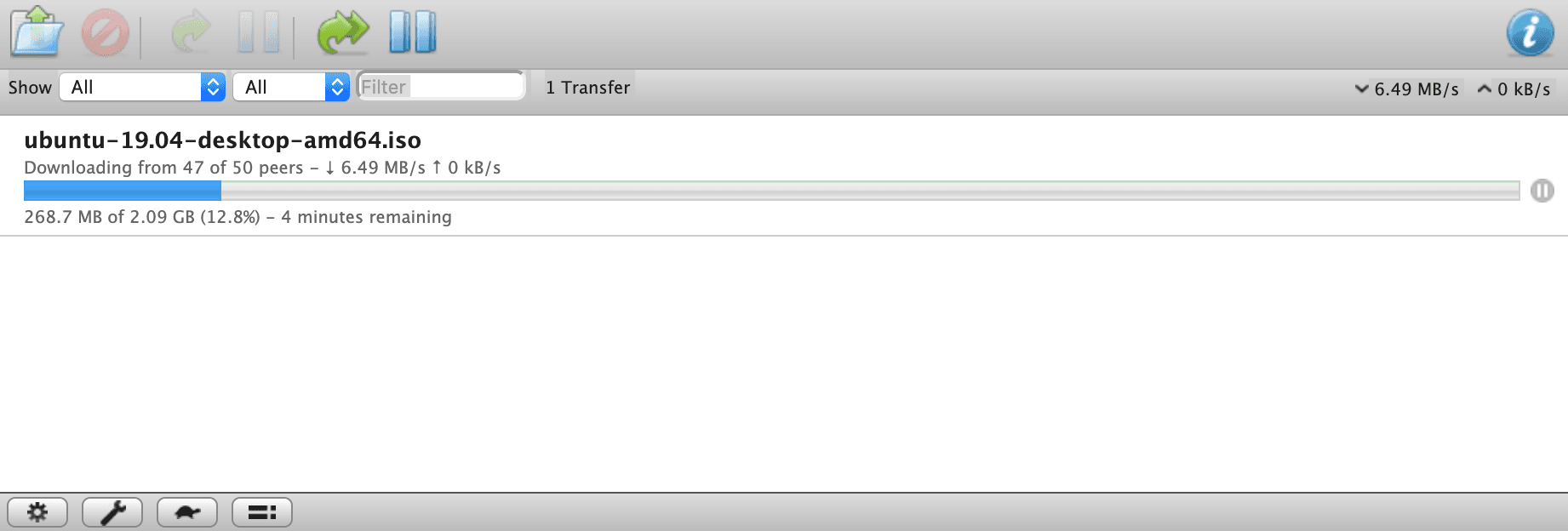

My ultimate goal was to be able to spin up and tear down a remote BitTorrent client entirely from the command line. I knew I wanted to run Transmission: I had used it in the past, and it has a web interface for adding and removing torrents and monitoring downloads.

VPN

As I'm hosting this in the cloud, I really wanted the BitTorrent traffic to route via a VPN rather than directly through the host instance. When I first started experimenting with this idea, I began by spinning up a t2.micro instance via the AWS console, connecting via SSH and installing OpenVPN directly on the host. I quickly discovered the hard way that starting OpenVPN while connected to the host over SSH wouldn't work. My guess is that by default it takes over the host's network traffic, which immediately dropped my SSH connection and left the server unreachable until it was rebooted via the AWS console.

This led me to Docker. Having OpenVPN containerised felt like a more sensible solution, as it would still allow me to SSH into the host instance to tinker with the setup. I figured someone must have already created an OpenVPN Docker image, and after some googling I found that GitHub user haugene had created an aptly named image, docker-transmission-openvpn, which bundles both OpenVPN and Transmission together. Bonus!

Syncing completed downloads to S3

Once downloads complete, Transmission copies files to the /data/completed directory. I needed a way to automatically copy completed downloads to S3, so I wrote my own utility in Go which simply watches a directory for new files and uploads them to an S3 bucket. The utility is called go-watch-s3 and I have a dedicated blog post about it here.

Both the Transmission container and the container running go-watch-s3 share a bind-mounted directory, so when Transmission writes files to its /data/completed directory, go-watch-s3 sees the new files and uploads them. Pretty neat!

Provisioning the instance with Terraform

The provisioning is handled by a Terraform configuration which sets up our infrastructure. It creates the EC2 instance, a security group so we can access the Transmission UI, and the appropriate IAM role, policy and instance profile so the instance is able to write files to our existing S3 bucket.

Software installation is handled by a user data script which runs when the instance first launches and is defined on the aws_instance resource in Terraform. The script is relatively simple: it installs Docker, pulls the images mentioned above and runs them. I had considered using Docker Compose, as it's usually a nicer experience when orchestrating multiple containers, but went ahead with vanilla docker run commands instead.

Finally, all that's left to do is apply the configuration with terraform apply to create our resources on AWS. Once the configuration is applied, the URL of the Transmission web UI will be output. However, you will need to wait a few minutes for the startup script to do its thing. Once the UI is available, torrents can be added, with completed downloads auto-magically copied to S3!

Once you're finished, the infrastructure you just created can be torn down with terraform destroy.

Stating the obvious

In summary, this was a fun 'toy' project, though I don't personally feel the need to improve it further. The itch has been scratched, if you will. To see the complete Terraform configuration, check out terraform-transmission-aws on GitHub.

While there is nothing illegal about BitTorrent from a pure technology standpoint, pirating content may well fast-track you to a reprimand from AWS or, worse, to having your account closed.